Last week I attended the Cosyne conference (my second time), and I would like to share my experience. Overall, I have to say it was superb. The organization was great, with a few rough edges to fix. I appreciated the refreshing first keynote, as well as the DEI talk. The things I disliked the most were the rather sad sandwiches offered for lunch and the first-day poster session. Regarding the content, I believe that some of the trends in the field, such as the rise of RNNs as the go-to computational models, and especially the vision of the AI-CompNeuro relationship featured in the panel discussion, are actually worrying. In particular, I do not share at all the general ideas of the panel, which from my point of view had a narrow vision and, at some points, a questionable philosophy.

So that’s the too long; didn’t read part. Let me now dive into the details, section by section.

Conference organization

In general, it was very good. Talks were well clustered by topic, sessions were not too long and usually ran on time, transport to Cascais was well organized, and the app worked as intended. So, in this sense, congrats to the organizers.

I have two main complaints here. The first is the opening poster session, which started at 20:00 and ran very late. This is a problem because most restaurants are closed by the time people get tired of listening to posters, which is very inconvenient. Also, drinks were served during the session, which ended with a couple of slightly overexcited people coming to see my poster towards the end; that does not help the session finish on time.

I think there is a lot of room for improvement in the poster sessions. Posters could be clustered by topic, and some of the “most orthogonal” clusters could be split across two different rooms of the building. There are simply too many people in the poster lobby: I lost most of my voice the first day and had a sore throat for the rest of the conference. And I am not the only one!

The sessions during lunchtime were definitely more engaging. However, lunch consisted of not-too-large cold sandwiches. Setting aside the quality (they got the job done, just meh), in my case I was not 100% full. That would not be a problem if they served something to eat during the next coffee break, but there was no more food until dinner. The second day I was starving; the third day I went out during the break to grab something. I don’t understand why they serve breakfast every day, when most hotels already provide it, instead of some food during the breaks.

So the organization was good in general, but these details seemed slightly overlooked. I think they should be relatively easy to improve.

The rise of RNNs as go-to dynamical systems

I had the feeling that in many talks, the model was built by fitting a recurrent neural network (RNN) to reproduce the observed data, and then either analysing the network or using it to make other predictions. Although in some cases this is perfectly fine and interesting, in others I believe it was overkill and maybe not needed.

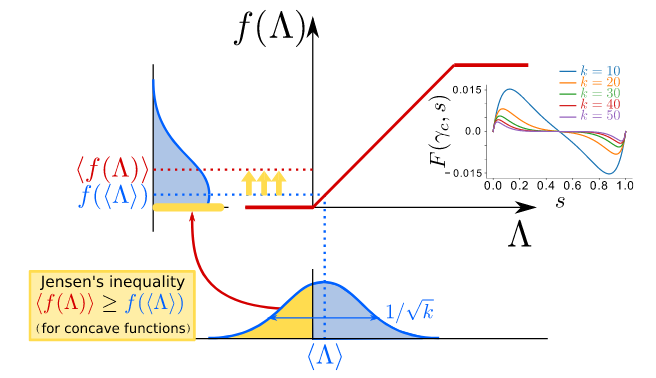

My problem with RNNs is that, at the end of the day, they work differently from our brain. Nothing guarantees that the mechanism they use to solve the task coincides with the one in the brain, even if they capture a wide range of the dynamics. However, many people just train the RNN on the data and check its dynamics and a few simple predictions, without further checks. The peak of this philosophy was the keynote talk by Sussillo, who argued that by training simultaneously on many tasks and inspecting the landscape of fixed points we could go further in our understanding of complex dynamics.

However, in the results he presented, the RNNs seemed to solve the tasks just by adding and moving stable fixed points of different sorts: no oscillations or chaotic dynamics, for example. But in the brain, many computations involve oscillations, noise, and other ingredients missing here. Even worse, the talk only presented local bifurcations, whereas dynamical systems can also undergo global bifurcations, which cannot be detected at all by looking only at the fixed-point structure. So the fixed-point landscape is, at best, an incomplete picture of what the system can do, and the RNN seems to solve the problems in a fundamentally different way than the brain does, at least in this case. Are RNNs “too narrow” a vision? When are they useful? How should we check whether we are overdoing it? Would a traditional model be more explanatory in many cases? Were RNNs used in those cases just because “other people” were doing it, or because they were simpler? Finally, I want to be clear that I enjoyed Sussillo’s talk a lot, as the dynamical systems nerd I am, and I believe the group is doing excellent work in understanding RNN dynamics; but the conceptual vision behind it for neuroscience, I do not buy.
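To make the point about fixed-point landscapes a bit more concrete, here is a minimal toy sketch in Python (my own illustration, not anything shown in the keynote). It assumes the supercritical Hopf normal form, a textbook 2D system whose only fixed point is an unstable focus at the origin, surrounded by a stable limit cycle. A fixed-point search in the spirit of Sussillo and Barak, minimising q(x) = ½|F(x)|² by gradient descent, finds only that single point, while simulating the system reveals a sustained oscillation that the list of fixed points does not describe. The parameters `MU` and `OMEGA`, the step sizes, and all helper names are arbitrary choices for illustration.

```python
import cmath
import math

# Illustrative system (an assumption, not a model from any talk): the
# supercritical Hopf normal form. In polar coordinates dr/dt = r*(MU - r^2)
# and dtheta/dt = OMEGA, so for MU > 0 every trajectory except the origin
# converges to a stable limit cycle of radius sqrt(MU).
MU, OMEGA = 1.0, 2.0


def flow(x, y):
    """Vector field F(x, y) of the system dx/dt = F."""
    r2 = x * x + y * y
    return (MU * x - OMEGA * y - x * r2, OMEGA * x + MU * y - y * r2)


def jacobian(x, y):
    """Analytical Jacobian dF/d(x, y) as ((a, b), (c, d))."""
    return ((MU - 3 * x * x - y * y, -OMEGA - 2 * x * y),
            (OMEGA - 2 * x * y, MU - x * x - 3 * y * y))


def find_fixed_points(starts, lr=0.01, steps=2000, tol=1e-6):
    """Minimise q = 0.5*|F|^2 by gradient descent (grad q = J^T F) from each
    start, keeping the distinct end points where q is numerically zero."""
    found = []
    for x, y in starts:
        for _ in range(steps):
            fx, fy = flow(x, y)
            (a, b), (c, d) = jacobian(x, y)
            x, y = x - lr * (a * fx + c * fy), y - lr * (b * fx + d * fy)
        fx, fy = flow(x, y)
        if 0.5 * (fx * fx + fy * fy) < tol and all(
                math.hypot(x - u, y - v) > 1e-3 for u, v in found):
            found.append((x, y))
    return found


def eigenvalues(x, y):
    """Eigenvalues of the 2x2 Jacobian from its trace and determinant."""
    (a, b), (c, d) = jacobian(x, y)
    tr, det = a + d, a * d - b * c
    disc = cmath.sqrt(tr * tr / 4 - det)
    return (tr / 2 + disc, tr / 2 - disc)


def simulate(x, y, dt=1e-3, t_max=20.0):
    """Forward-Euler integration; returns the trajectory radius at every step."""
    radii = []
    for _ in range(int(t_max / dt)):
        fx, fy = flow(x, y)
        x, y = x + dt * fx, y + dt * fy
        radii.append(math.hypot(x, y))
    return radii


starts = [(1.5 * math.cos(k), 1.5 * math.sin(k)) for k in range(8)]
fixed = find_fixed_points(starts)
print("fixed points:", [(round(x, 4), round(y, 4)) for x, y in fixed])
print("eigenvalues at origin:", eigenvalues(0.0, 0.0))
radii = simulate(0.1, 0.0)
print("late-time radius range:", min(radii[-2000:]), max(radii[-2000:]))
```

In this toy case the unstable focus at least hints that something else must be going on. For a global bifurcation such as a homoclinic one, even that hint can be missing: the fixed points and their local stability can stay unchanged while the attractor itself changes.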

As a counterexample, the last talk of the workshops, by Sprekeler, was fantastic. They trained a spiking network to solve a task and found that the architecture of inhibitory neurons matched the one observed in experiments. The network was more “realistic” than others from the outset, but what I liked the most was the analysis afterwards. Sprekeler said that they found their solution by optimising for a task with backpropagation, but the brain might do something different, so they looked for a biological mechanism that matched it. I think this is far more relevant for neuroscience, and it sparked a very nice discussion among the speakers afterwards about self-organization, optimization, and biological evolution.

Panel discussion

We continue with philosophical issues in the panel discussion. The main topic was the relationship between neuroscience and AI and how they feed back into each other. The discussion was interesting, but the whole time I felt it suffered from the hammer syndrome: “when all you have is a hammer, everything looks like a nail”. The panel seemed to agree that most major advances in machine learning were inspired by neuroscience. I will not argue against neuro influences on AI, but many statements from the panel dismissed a lot of other sources of knowledge. For example, many machine learning classifiers rely on trees and graphs; backpropagation itself is not very neuro; the Boltzmann machine, mentioned by the panel as “neuroscience”, is actually based on maximum entropy (maxent) and the Ising model rather than on neurons; Bayesian frameworks come purely from statistics; genetic algorithms, from evolution; attention, from cognitive science; and then there is reinforcement learning… Also, many other networks are known to perform computations: distributed artificial systems and genetic networks in bacteria also process information in ways similar to neuronal networks. The list is long and full of different ideas that have shaped the field. I am no expert on AI, but I don’t agree with the panel that neuroscience carries so much weight. I understand its importance, and I understand we are at a neuro conference, but the fact that no other field was even mentioned during the entire discussion was disappointing.

The same applies to influences in the other direction. During the discussion, it seemed that AI is the only thing we need to further develop neuroscience. In particular, the panel discussed at length how learning about artificial neural networks can help us learn about biological ones. The problem here is that, as I argued in the section above, they are essentially very different, so one has to be very careful when extrapolating from artificial to biological networks. The only way to do this, at the end of the day, is to use our knowledge from biology: development, evolution, biochemistry. Evolution is particularly relevant. If biological and artificial networks learn differently, the ways in which artificial networks and evolution optimize are even more distinct. In particular, evolution does not always reach the best solution, is subject to huge fluctuations, and involves different competing forces (like the direct competition that sometimes appears between natural and sexual selection). Artificial neural networks by themselves cannot account for all these biological factors unless we borrow more concepts from biology. So maybe the next neuroAI breakthrough will not even come from neuroscience!

I want to finish with two notes that I found worrying. First, one of the panelists argued that neuroscience got a lot from AI because of how much our quantitative predictions have improved. In my opinion, this reflects a poor understanding of how science works: the goal of science is to build models and theories able to explain (approximate the ontology of) the observed phenomena. Qualitative predictions with a strong theoretical background are always superior to quantitative predictions devoid of content. I can train a network to spit out data that predicts what I see, but if I understand neither the original system nor the network, what did I gain? The only way AI can support neuroscience is by generating explorable models that we can dissect to push our explanations further. The second is the presence (and posture) of the Google panelist, lowkey endorsing this kind of instrumentalist approach, which harms scientific progress. About this particular panelist, who also gave a keynote talk: the Brainfuck experiment he presented was in the very same spirit as the first ALife models of the late 80s and early 90s, like Tierra. However, he presented it all as if it were original research, without citing a single source.

All in all, an interesting discussion, but I would have liked to see at least a slight acknowledgement that neuro and AI are not the only two things in the world, and that there are many more things we should be paying attention to.

The DEI keynote

A final detail from the panel caught my attention. At the beginning, one of the panelists said he did not know enough about AI to be part of the discussion. Later on, the colleague on his left praised his great ML contributions to the field. A second later, the speaker realised, and also acknowledged the contributions of the woman sitting next to him… who had actually been invited because her main research was the application of ML methods to neuroscience. Just a slip, yes, but the kind of slip that reminds us that we tend to pay less attention to women.

I have discussed this with other colleagues too: if you go to Cosyne or any other conference, count the questions to speakers asked by men and by women, and be (un)surprised. A lot of stupid questions from over-confident men, and too many other people not asking, maybe because they do not feel comfortable or confident enough to do so. There are clearly some social gaps yet to be closed.

For these reasons, I really welcomed the inclusion of a keynote focused on diversity, equity and inclusion (DEI) in the main program. I think this is a great initiative, and similar ideas could be incorporated in the future so that scientists can talk about and acknowledge some of the problems we face as a community. I have to say, however, that I was not very fond of the contents of the talk. First, the speaker spent (in my opinion) a lot of time talking about her own research and publicising her students. Her research was very relevant to the topic, but most of what she presented concerned the topics themselves rather than actual results and/or how to implement those strategies to improve the community. On the positive side, I know there is robust evidence that story-driven, personal experiences are more convincing to an audience, and she did very well in this regard, getting the public to participate and sharing her own experience of sexual abuse. This is not something one often sees in a keynote, and it’s great that the speaker put the topic on the table. People were talking about it later, and that’s an important achievement.

So, my main criticism would be the lack of conclusions or strategies for improvement, and of insights into the roots of the problem. There was one suggestion for PIs, though: to run anonymous polls so their students can talk about problems. Sadly, this is not applicable in many situations. As a Spanish student, I was my supervisor’s only PhD student for a long time, and this was the rule across entire departments and universities; with a single student, an “anonymous” poll is not anonymous at all. More generally, what I would like to see from these talks is a better analysis of the sources of the problem and a greater push for institutions to develop protocols that help the victims, rather than shifting the responsibility onto individual PIs. A bad PI will never implement anything to improve the situation in the lab, and as long as he keeps getting students and papers, there’s no motivation to do so.

However, don’t get me wrong: as I said, I’m very happy the talk took place, regardless of how much I liked it. It was important to have this talk, and I fully agree with many of the points made there.

Wrap-up

So these are, more or less, my impressions of Cosyne 2024: the organization in general, the contents and scientific trends I saw, and the discussion about inclusion and problems in academia. It was a nice conference, and I came away with a ton of emails from potential collaborators, a lot of new friends, a great time with old friends, a kilo of pastel de nata, and a cold that prevented me from fully enjoying the last party and has accompanied me while writing this post.

See you all next time! ~